Thought Leadership in Your Inbox

23 February 2024

How do you shape a GenAI mindset?

To realize the value of GenAI, it's not enough to create experiences that build adoption. Organizations should also focus on developing a GenAI mindset.

19 October 2023

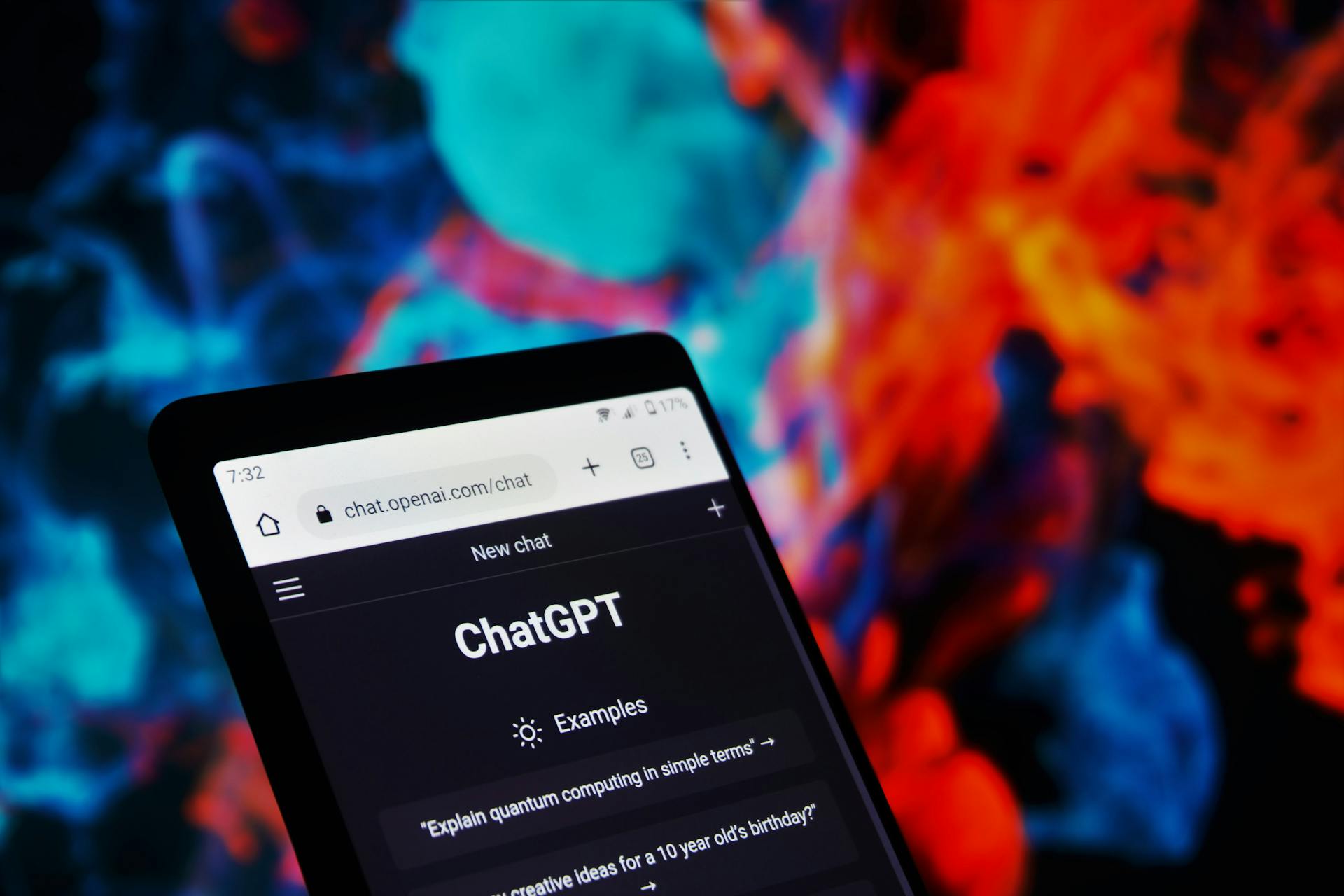

ChatGPT - What you should do next

There's so much buzz about ChatGPT, but how can you help your organization adopt it safely and ethically? Chief Strategy Officer Colin Sloman discusses how Cognician can help.

18 July 2023

Change Made Easy

Why is change so hard? And how can it be made easy? In this blog post, our Chief Strategy Officer, Colin Sloman, examines the challenges of change and offers insights on how to make it easier. Drawing on his expertise in change management, transformational change, and behavioral science, Colin shares stories of both failed and successful change efforts. Join him as he explores how change has evolved and how it continues to affect organizations.

17 March 2023

It's as Simple as A, B, ... (and C)

"How do you know when real change has occurred?" This is a question our Chief Strategy Officer, Colin Sloman, has asked many times. Colin shares his thoughts on using A/B testing and data to track change and deliver meaningful results in large organizations.

24 January 2023

Change is a doing sport

Practice drives progress, making change a doing sport. Colin Sloman suggests an approach to learning that involves not just knowing but also understanding, reflecting, and practicing to bring about lasting change.

13 December 2022

2020s, the Coming of Age of HR. No, Really!

The 2020s mark the beginning of a new era for Human Resources. Colin Sloman examines the coming 'Decade of HR' and the shift from administration to talent management, recruitment, and retention. What does today's workforce need, and how well will HR meet those needs?

15 November 2022

Cloud Adoption: Change Their Behavior or Change Your Mind

Change involves planning and processes, but it starts with people. Colin Sloman explores what people will need in order to adapt to, and adopt, cloud technology.

25 October 2022

Delivering Transformative Change – Engaging One Mind at a Time

Is transformative change just another buzzword losing its buzz? Colin Sloman discusses how transformative change can become a reality rather than just a phrase.

6 September 2022

Change Hearts, Minds, and Actions Through Activation. Here's How:

Cognician co-founder Patrick Kayton discusses our unique approach to behavior change and how it can improve your change initiatives.

18 August 2022

Hybrid Workplace Success Relies on Leaders. What Can We Do to Make Sure They Succeed?

Hybrid working is the future. It's crucial to equip leaders to get it right. Colin Sloman discusses how Cognician can help.

2 August 2022

Data is Everywhere. Does Your Organization Know What to Do With It?

Data fluency is increasingly important for today’s organizations. Colin Sloman discusses why and how Cognician can help.

29 June 2022

Your Approach to Onboarding Acquired Hires Can Make or Break Your M&A

How you engage and onboard acquired hires can make or break a merger or acquisition. Colin Sloman discusses why and how Cognician can help.

3 May 2022

Sustainability Is No Longer an Option but the Only Way Forward for Businesses

The transition to sustainability is not out of reach. Our Chief Strategy Officer explains why and how Cognician can help.

5 April 2022 | Feature

Designing for Evidence Using Mechanistic Explanations

Mechanistic explanations are one way we provide reliable evidence of behavior change. This article discusses how.

29 March 2022

Exploring the Role of Discovery in the Development of Our Learning Programs

How do we activate behavior change? This article discusses our discovery process for creating our custom programs.

22 March 2022

Why Diversity and Inclusion Are Important for Problem-Solving

What happens when we bring in new perspectives? This article explores that question and how to activate inclusive problem-solving.

15 March 2022

How the Great Resignation Must Lead to the Great Onboarding

Good onboarding can ease the effects of the Great Resignation. Our CSO, Colin Sloman, explains why and how Cognician can help.

15 March 2022

By Becoming Aware of Your Unconscious Bias You Can Activate Behavior Change

Using one powerful idea, we show you how to become aware of your unconscious biases that reinforce negative stereotypes.

8 March 2022

Leaders Who Listen: How to Collaborate in Diverse Teams

Nothing says "collaboration" quite like the launch of a space rocket.

1 March 2022

How Mentoring Can Activate a Commitment to Lifelong Learning

"Wax on! Wax off!" As mentoring goes, who can forget the powerful lessons learned in the iconic movie The Karate Kid?

22 February 2022

Activate an Innovation Culture by Adopting These Four Habits

Successful companies continuously embrace new ways of thinking. In this article, we will show managers how to make innovation a habit.

15 February 2022

Three Ways Mentoring Can Activate Team Success [For Leaders]

How do you become an effective mentor to your team? This article discusses three actions you can take as a leader.

8 February 2022

How Reverse Mentoring Activates Organizational Success

By turning the tables on traditional mentoring, reverse mentoring can activate new perspectives and lessons in the workplace.

1 February 2022 | Feature

Has The 'Great Crew Change' Finally Arrived?

Cognician CSO, Colin Sloman, discusses the challenges and importance of reskilling oil and gas workers for a sustainable future.

11 January 2022 | Feature

Find Your Next Big Idea with Insight Analysis

Ever wanted to understand how your employees feel about specific topics? Through Insight Analysis, you can do just that.

4 January 2022

What Termites Teach Us about Activating Change

Using a termite colony as an example, we discuss the power of collective action when activating behavior change at scale.

28 December 2021

Does Social Interaction Improve Learning Outcomes?

We review the work of Zhang et al. (2017) to show that social engagement is vital to completing online learning programs.

21 December 2021

Why Don't Team Building Activities Work?

Team building can feel like a chore. This article explores how we can make these activities more meaningful and effective.

14 December 2021

The Powerful Role of Reflection in Activating Behavior Change

What is the best way to learn? This article explores the crucial role reflection plays in learning outcomes.

8 December 2021 | Feature

This Is Not a Drill: How Should WPC Rise to the Challenge of a Net-Zero Future?

Will the most significant contributor to climate change rise to the challenge of a net-zero carbon future?

25 November 2021

How to Track the Impact of a Cybersecurity Training Program

Tracking the impact of a training program comes back to the fundamentals of learning: Does the program activate behavior change within your teams?

22 November 2021 | Feature

How Do Diversity and Inclusion Enhance Organizations?

We know intuitively that diversity matters. It’s also increasingly clear that it makes sense in purely business terms.

18 November 2021 | Feature

How Much Does Cybersecurity Training Cost?

In this article, we'll show you the most cost-effective ways to activate security behaviors that defend your organization.

15 November 2021 | Feature

COP26: What Comes Next?

We discuss what is next for organizations in the wake of COP26 and how they can rapidly adopt a sustainability agenda.

10 November 2021 | Feature

The Big Question about COP26 and Why It's Important to Start Small

COP26 preparation is in full flow! So it is not surprising that many clients are asking how to drive a mindset shift within their organizations on climate, carbon, and a sustainable future for the planet.

4 November 2021 | Feature

How Long Does It Take to Develop Security Maturity?

In this article, we discuss how to develop security maturity in 30 days or less using an activation approach.

26 October 2021 | Feature

Leveraging the Zone of Proximal Development in Cybersecurity Training

Understanding the skill level of your team is essential when designing InfoSec training experiences.

12 October 2021 | Feature

The Four Failures of Cybersecurity Training

Could your information security training be better? Here’s how you can improve it.

6 October 2021 | Feature

The Role of Discovery in Building Award-Winning Learning Programs

Discovery is key to building relevant and valuable behavior-change programs that are measurably effective.

4 October 2021 | Feature

How Cognician Builds Gamification into Learning to Activate Behavior Change

Wondering how to leverage gamification? Here’s how we trigger powerful emotions and maximize engagement in our change programs.

14 September 2021 | Feature

Why Cybersecurity Training Is Failing Employees and How You Can Win

Quality training needs to activate behavior change, develop a cybersecurity mindset in employees, and cultivate good habits.

13 August 2021

What Makes a Leader Successful at Activating Change?

It takes a great leader to change behaviors in any organization. But what are the qualities of a leader who successfully activates change?

6 July 2021 | Feature

What Are the Three Steps to Successful Behavior Change?

Successful behavior change can be simple if done correctly. Here, we cover the three steps needed to activate behavior change.

20 May 2021 | Feature

What is an Agile Leader?

Agility is an important characteristic for businesses, and for leaders too. But what makes a leader 'agile'?

18 May 2021 | Fast Facts

3 Ways Transformational Conversations Can Activate Behavior Change

At Cognician, we understand that conversation is key to activating behavior change. Find out why here...

6 May 2021 | Feature

How to Measure the Impact of a Behavior Change Program: Why Cause Matters

Once a behavior change program has been put in place, how can you measure its effectiveness? Here, we explain the process.

4 May 2021 | Fast Facts

3 More Power Skills to Develop in the “New Normal”

In our previous blog post, we looked at the need to enhance employees’ communication skills, adaptability, and analytical thinking to meet the demands of the “New Normal”.

27 April 2021 | Fast Facts

3 Power Skills HR and Change Managers Need to Develop in the “New Normal”

It’s no secret that the way businesses operate has been disrupted forever. As the R.E.M. song goes, “It’s the end of the world as we know it… and I feel fine!”

22 April 2021 | Feature

What Are Soft Skills and Why Are They Important?

Soft skills are just as important as hard skills in the workplace, but what exactly are they?

20 April 2021 | Fast Facts

3 Ways Habit Formation Can Transform Teams

Whether it’s being negative, missing deadlines, or showing up late for meetings, bad habits can have a negative impact on your team.

8 April 2021 | Feature

4 Ways to Build a Feedback Culture at Work

Giving and receiving feedback is critical for business success, but how do you develop a feedback culture at work?

6 April 2021 | Fast Facts

3 Ways Simplicity Can Activate Behavior Change

There’s something to be said for simplicity. Especially when it comes to learning programs that claim to drive behavior change. As the saying goes, all that glitters is not gold.

1 April 2021 | Opinion Piece

Why Manners are Key to Driving Change at Work

Manners play an important role in building trust, and trust is instrumental when it comes to driving behavior change at work.

29 March 2021 | Fast Facts

3 Facts about Fostering Teamwork that Change Managers Should Know

These fast facts explore how change managers can develop more effective, productive, and cohesive teams, improve communication, and foster harmony.

24 March 2021 | Expert Q&A

The Quest for Change: Why Replicating Excellence is Key

When it comes to behavior change, should you be fixing issues or trying to replicate success? Find out in this Q&A with Dr Mark Houghton.

15 March 2021 | Fast Facts

3 Ways to Develop People at Work

Talent management isn’t only about recruiting and retaining the best employees you can find. It’s also about helping them become the best they can be at what they do and how they do it.

8 March 2021 | Fast Facts

3 Reasons Why Women Make a Positive Impact in the Workplace

Today, as we celebrate International Women's Day, we pay respect to all women who have been driving change in the workplace.

4 March 2021 | Opinion Piece

How Problem-Based Learning Can Activate Behavior Change

Problem-based learning can activate behavior change in organizations far more effectively than micro-learning.

2 March 2021 | Opinion Piece

Why Inspiration Is a Great Enabler of Change—and How You Can Inspire

To activate behavior change within a team or organization, you need to enable inspiration to come from anyone, anywhere.

1 March 2021 | Fast Facts

5 Lessons Change Managers Can Learn from Seymour Papert

Seymour Papert's constructionist theory has played a key role in learning efforts. What can change managers learn from him?

25 February 2021 | Feature

Why Content Isn't Always the Answer for Change Management Practices

Content-heavy solutions are not always the answer when it comes to creating behavior change. Here's why...

22 February 2021 | Fast Facts

4 Essential Skills for the Workforce of the Future—and How to Instill Them

Creativity, complex reasoning, and profound socio-emotional intelligence are fast becoming essential skills.

18 February 2021 | Opinion Piece

How Reflection Helps Us Learn, According to Science

When it comes to learning, simply memorizing content isn't the most effective strategy. Reflection is a necessary element.

16 February 2021 | Opinion Piece

How to Get Your Team Rowing to the Same Rhythm and Boost Results

A team that works together will be effective, but one that reaches a state of flow can see a huge boost in results.

15 February 2021 | Fast Facts

3 Reasons Change Management Plans Fail—and How to Avoid Them

For organizational change to take hold effectively, one vital element must be present: reflection. Here's why...

27 January 2021 | Feature

7 Lessons 2020 Taught Us About Change Management

2020 was nothing short of disruptive, but in hindsight, we have gleaned some important lessons. Here are some insights...

21 October 2020 | Feature

How to Activate Behavior Change at Work

How do you drive behavior change at work and make it stick? This framework explains the impact of digital learning experiences.

20 October 2020

7 Reasons Reviewers Say Cognician Activates Behavior Change

From learning that sticks to enabling growth, activating impactful behavior change is crucial to successful change management.
